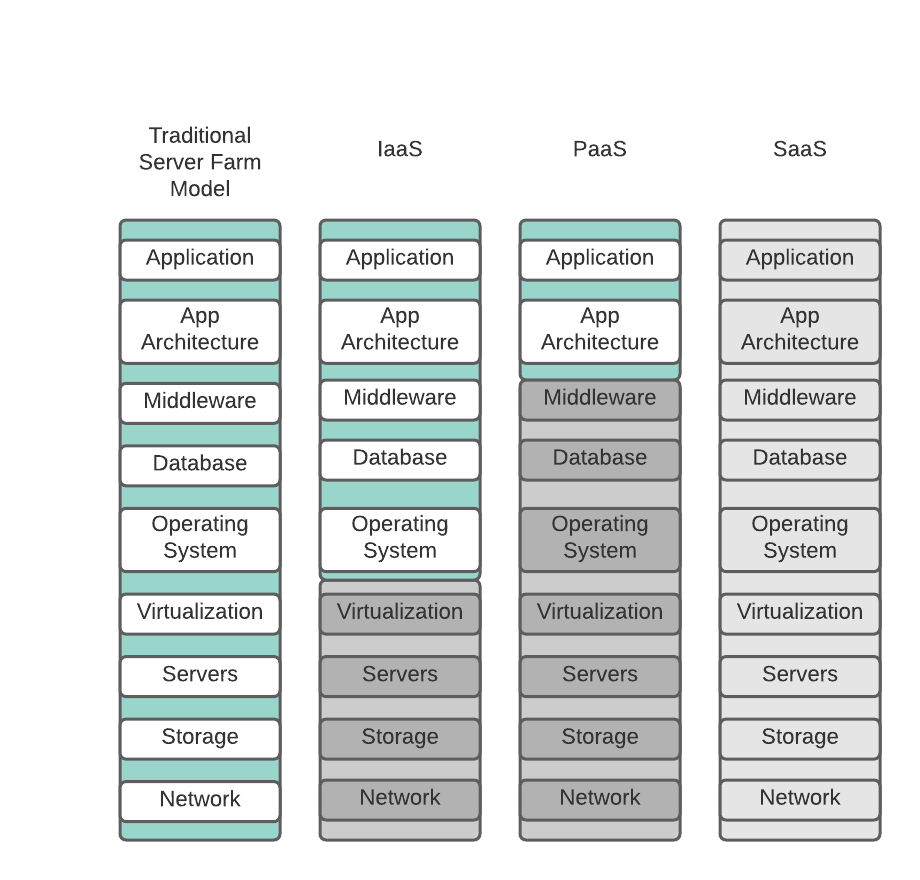

Overview

Most of us work in the Platform as a Service (PaaS) or Software as a Service (SaaS) world where we do not care about what IP address is assigned to a device, what range that IP address came from, or even what version that IP address is. Someone set up the environment, and we live in it. But what if you are responsible for setting up the environment? What if you must establish the port ranges for the containers you will deploy? What if you are troubleshooting a problem and trying to understand where the problem is, and all you are getting are IP addresses instead of hostnames. Or worse, MAC addresses.

Sometimes you have to know more about what is happening inside the system than just the idempotent deploy command. This is not to rewrite the Crab Book, the Cricket Book, or the Snail Book1, but to give you a quick overview of addresses, address schemes, and where you might begin to look if you have problems or have to help set up the environment.

This post will walk through several areas related to IP addressing and some ancillary services necessary to keep the network running effectively.

We begin with an overview of basic IP addressing and the traditional IPv4 structure.

IPv4

Where to begin? RFC1918 is an excellent place to start. The 1996 update to RFC1597 identified and fixed the problems with the original RFC. Specifically, it addressed one key issue:

- The IPv4 space, consisting of only 4,294,967,296 unique addresses, would not be enough to give every computer in the world a valid, routable address.

To resolve this problem, it created three private (reserved) address spaces, which we will cover in just a moment.

But first, let's go back a few more years in history. In the late 1980s and early 1990s, organizations other than universities and the Federal Government requested address space on the Internet, but there was no formal way to assign the space. Of course, there were not that many institutions, so most just self-assigned their addresses, and it never caused much of an issue because of limited routing capabilities. In 1990, we see the first RFC 1174 for IAB Recommended Policy on Distributing Internet Identifier Assignment, and IAB Recommended Policy Change to Internet "Connected" Status which gives rise to a whole bunch of foundational services that we rely on today (including DNS, and IANA). They released the mapping structure for the available IP addresses (IPv4) three years later. These ranges are:

Total Allocated Allocated (%)

Class A 126 49 38%

Class B 16383 7354 45%

Class C 2097151 44014 2%

The Class A portion of the number space represents 50% of the total IP host addresses; Class B is 25% of the total; Class C is approximately 12%. From the RFC:

Class A and B network numbers are a limited resource, and therefore allocations from this space will be restricted. The entire Class A number space will be retained by the IANA and the IR.

But they quickly realized this was not going to work for very long, and RFC1597 allocated the reserved address space and was replaced by what we call the CIDR RFC1918.

But the RFCs do not explain how this relates to networks and the individual machines on those networks, so let’s get practical.

If we start with a Class A network, the RFC says that the allocated range of IP addresses runs from 0.0.0.0 to 127.0.0.0. Because of how things work, this leaves about 126 networks that can be assigned, and each network can have roughly 16 million hosts. Again, there are some limitations to this, but 16 million hosts are a lot of machines. In the old days, companies like Hewlett-Packard had a complete Class A address for their network. This was not a recipe for success, but this is how it was allocated, originally.

Next, Class B networks run from 128.0.0.0 to 191.0.0.0, but because of the netmask (which we will cover in a moment), there are roughly 16383 networks available with about 65,000 hosts per network.

Finally, a Class C network runs from 192.0.0.0 to 223.0.0.0, but again, because of the way it is masked, there are approximately 2 million networks, but with only a paltry 256 hosts per network. This results in more networks to build with but fewer total hosts per network.

If you are paying attention, you notice a gap between the end of the Class C network and the 255.0.0.0 network boundary (because of bit math, the initial IP address ranges went from zero to 255). These missing addresses are reserved for experimentation and other non-routable purposes. Using those addresses (sometimes called Class D and Class E) should be avoided.

Netmasking

If we return to the RFC1466, we learn that they allocate the addresses to Class A, Class B, and Class C. However, as mentioned, the address space allocated is 0 to 255 for each range of the dotted decimal. So the base range is 0.0.0.0 to 255.255.255.255.

But, we have established that the addresses between 0 and 127 are somehow different from the addresses between 128 and 191 and 192 and 223. For this discussion, we are ignoring the addresses beyond 223. The question is how are they different. The answer is their netmask.

Netmasking is one of the more complicated parts of network address management and remains a significant source of routing errors. While today we no longer talk about the class of an address, some basic principles still apply, and the netmask is core to these principles.

As part of the address allocation, what were called natural masks were applied at the bit boundaries. Especially if the first bit of an IP address is 0, and its natural mask is 8. This translates to the Class A network scheme, so using CIDR notation, a Class A network would be represented as 0.0.0.0 /8

If the first two bits of the address are 1 and 0, then the natural mask is 16. This is the same as the Class B address space from 128.0 to 191.0 and is written 128.0.0.0 /16.

Finally, Class C has a natural mask of 24 and runs from 192.0.0 to 223.0.0 and is written 192.0.0.0 /24.

Notice how I have written our example classes above. Class A is written as x /netmask, Class B x.x /16, and Class C x.x.x /24. This tells you that there can be no hosts in the bits that are written.

If you allocate a network 131.0.0.0 /8, you might cause route aggregation issues, but this is entirely valid under CIDR. While today we do not pay as much homage to the address class, there are still certain assumptions as to how addresses are allocated and what the netmask of those addresses might or should be.

Reserved Addresses

In the middle 1990s, we recognized that addresses and address space allocation would be rapidly depleted. Two solutions to this problem were adopted:

- Class A addresses were effectively recalled and reallocated by IANA

- CIDR and reserved address spaces were implemented

The first caused no end of problems within organizations with large computer systems already deployed, especially within the US Federal Government. Today, some Class A networks are controlled only by the US Federal Government, specifically for backup command and control.

RFC1597 introduced an IP management scheme for private networks. Think of it this way. If you are not connected directly to the Internet, do you need to have an Internet routable address? The answer is no. As more and more companies reduced their gateway presence down to one or two key points, the need for each host to possess an Internet routable IP address was no longer needed. But they still needed to be able to connect to their internal systems, servers, printers, etc. So the reserved (private) address space comes into play. Depending on the size of your company, whether you use a Class A, Class B, or Class C address, is dependent on the number of devices and other infrastructure and political decisions.

The reserved address spaces are:

- 0.0.0.0 (default gateway address)

- 10.0.0.0/8 (non-Internet routable private address space)

- 127.0.0.0 (local host)

- 169.0.0.0 (link local)

- 172.16.0.0/12 (non-Internet routable private address space)

- 192.168.0.0/16 (non-Internet routable private address space)

Three of these spaces, 10, 172.16, and 192.168.0, can be used to connect hosts, printers, network-attached storage, or any other IP-connected device to your internal network and routed within your internal network.

Most cloud environments expose all three of these, for internal server and container hosting, with 10.x.x.x being the most common, both in terms of available space for host and the most sliceable via a netmask.

Managing IP address space is both an art and a skill that takes years to learn and master, but understanding the basics of address allocation, masking, and the type of address you are dealing with will enable you to make informed decisions. While the 10 address space gives you the most flexibility for host allocation, some thought needs to be given to how that address space will be sliced and diced. Done improperly, you will experience excessive routing overhead, leading to delay and increased latencies that may not immediately be obvious.

Link Local (169.0.0.0)

We need to spend a moment on the Link-Local address. This particular address comes into its own in IPv6 but has a purpose in IPv4 (although it is more limited).

The link-local address is the first address a node assigns itself if it does not already have a hardcoded address assigned. It is how the workstation calls out to the address assignment system (usually DHCP) and asks for a valid IP address. It is only valid on the local segment, but the node would not be able to come up on the network without it.

If your machine cannot connect and does not have a hardcoded address, if, after your query for its IP address, it says 169-something, you know there is something wrong with the address allocation system. At least under IPv4

IPv6

IPv4, with its various hacks and modifications, has managed to serve the computing world quite nicely as the Internet expanded, then exploded, but even as early as 2000, chinks in the armor (as it were) showed. Further, with an increased number of threats, the protocol's lack of general security meant additional functionality had to be implemented in other services or bolted onto existing kernel processes and stood up at primary and secondary gateway access points.

And then, in 2011, the Internet Corporation for Assigned Names and Numbers (ICANN) officially ran out of IPv4 addresses it could assign to users. Meanwhile, in 2010, the United States Federal Government mandated:

… Federal agencies to operationally deploy native IPv6 for public Internet servers and internal applications that communicate with the public servers.

In 2010, when this mandate came out, most of the government's equipment could not support the IPv6 protocols outside of some of the core routers and some *Nix systems. In fact, aside from Cisco and other routers and some versions of *Nix, IPv6 would not be supported for several years to come.

Oddly, development on IPv6 began in the 1990s. Before the Federal mandate, there were already articles and books on the protocol and how to deploy it, assuming you had access to the right equipment.

IPv4 provided addresses for 4.3 billion nodes, whether those nodes were physical computers or virtual machines. Using Network Address Translation (NAT) and private addresses, we managed to stretch the number of addressable nodes beyond 4.3 billion. Still, even with this trickery, it was clear that the IPv4 space was not going to be enough.

With IPv6, we now have access to some 340 trillion trillion trillion addresses or roughly 6.65 × 1023 addresses per square meter on earth. This partially explains why my oven has an IP address2. If we compare IPv4 and IPv6, in IPv4, we have roughly 2,113,389 networks. With IPv6, the current global Unicast netmask allows for 245 networks with a /48 prefix, or 35,184,372,088,832 networks! In other words, you get a network, and you get a network, and you get a network, and…. There are 195 countries on the planet as of 2025, so each country could have a network with 35,184,372,088,832 networks. There are roughly 1.5 billion people in China, so each person could have their own network. More specifically, each person would be entitled to approximately 24,000 networks if that was how we assigned them.

But there are some other, more useful features that IPv6 brings that are important. Most important of these is the Stateless Autoconfiguration capability (SLAAC). While tools like DHCP have made things more manageable in terms of assigning and managing IP addresses, there is still a lot of manual work that has to be done to ensure the correct setup of DHCP. With SLAAC, that work is removed (we will talk more about Stateless Autoconfiguration in the DHCP section). Another improvement is ICMP (Internet Control Message Protocol), a networker's best friend, which has become more powerful with IPv63.

The other change is in the address makeup itself. IPv4 uses a 32-bit address space, represented by the dotted notation system. IPv6, on the other hand, is based on a 128-bit address space and represented by the colon notation system. We will see an IPv6 address in a moment.

Finally, the IPv6 community has gained some wisdom over the last 20 years in terms of lessons learned in implementing and managing IPv4 and the sometimes slow rollout of IPv6.

Address Types

We did not talk about address types in IPv4, and it generally is not essential, but I will highlight an additional difference between IPv4 and IPv6, which is in the area of Address Types. In IPv4, we have:

- Unicast - A unicast packet is addressed to one individual host.

- Broadcast - All systems on a network are addressed using the broadcast address.

- Multicast - Groups of systems can be addressed using a multicast address.

In IPv6, we have:

- Unicast - A unicast address uniquely identifies an interface of an IPv6 node. A packet sent to a unicast address is delivered to the interface identified by that address.

- Multicast - A multicast address identifies a group of IPv6 interfaces. All members of the multicast group process a packet sent to a multicast address.

- Anycast - An anycast address is assigned to multiple interfaces (usually on multiple nodes). A packet sent to an anycast address is delivered to only one of these interfaces, usually the nearest one.

IPv6 Addressing

One other difference in IPv6 that we do not see in IPv4 is address scope. An address scope can either be global or non-global (link-local). Non-global addresses exist in IPv4 - we call them private or non-routable (that’s non-Internet routable. We explicitly removed them from the legal Internet addresses, but they are internally routable).

In IPv6, the idea of local addresses was built-in to the solution rather than an afterthought. Every IPv6, except the unspecified address, has a scope, which determines the topological span where the address can be used. If you have not used IPv6 and scoped addresses, it can be initially confusing, and you should read up on it before diving into it. RFC 4007 is an excellent place to start.

Now for the fun. This is an example IPv6 address:

2001:0db8:0000:0000:0202:b3ff:fe1e:8329

In certain circumstances, what is nice is that you can shorten the address. The rules are:

A double colon can replace consecutive zeros or leading or trailing zeros within the address.

And

The double-colon can appear only once in an address.

So our address can be written:

2001:db8::202:b3ff:fe1e:8329

As you read addresses, make sure you understand the rules before you start shortening addresses. It is always better to write the complete address than shorten it incorrectly.

Consider:

2001:db8:0000:0000:0056:abcd:0000:1234

If we apply the rules, this address can be represented legally in one of these four examples:

2001:db8:0000:0000:0056:abcd:0000:1234

2001:db8:0:0:56:abcd:0:1234

2001:db8::56:abcd:0:1234

2001:db8:0:0:56:abcd::1234

Each example is a legal representation of the IPv6 address 2001:db8:0000:0000:0056:abcd:0000:1234. Hopefully, this highlights how important it is to understand the rules fully. Note that the double colon can be placed in either the forward-most location or the rearmost, but not both. Only one double colon per address, please.

From an operational standpoint, there should be agreement within the team on how the address, especially the double colon, is shortened to prevent confusion. If in doubt, follow RFC 5952. Also, note that the alphas are always represented as lower case letters4.

Finally, you can represent IPv4 addresses in IPv6 notation5! So if your IPv4 address is:

192.168.0.2

It becomes:

0:0:0:0:0:0:192.168.0.2

Or:

::192.168.0.2

Or:

::c0a8:2

Prefixes, Reserved Addresses, and Netmasking

Remember, we touched on address scope earlier. We are going to skim a bit more.

First, in RFC 4291, we learn about the global route prefix, which is nothing more than the high order bits that identify the subnet or specific types of addresses. This is similar to the Classless Interdomain Routing (CIDR) notation used in IPv4.

Specifically:

Global Unicast: 2000::/3

Link-local unicast: fe80::/10

Unique-local IPv6 address: fc00::/7

Multicast: ff00::/8

It is important to note that any range not listed above and with a couple of exceptions6 is either reserved or unassigned. Currently, The Internet Assigned Numbers Authority (IANA) assigns only out of the binary range starting with 2000::

Some of these exceptions include:

0100::/64: Discard-Only Address block (RFC 6666)

64:ff9b::/96: IPv4-IPv6 Translator (RFC 6052)

2000::/3: Global Unicast Space (RFC 4291)

2001::/32: Teredo7 (RFC 4380)

2001:db8::/32: For documentation purposes only, non-routable (RFC 3849)

2002::/16: 6to4 (RFC 3056)

fc00::/7: Unique-local (ULA) (RFC 4193)

fe80::/10: Link-scoped unicast (RFC 4291)

Remember I mentioned something called the unspecified address? Specifically, this address is:

0:0:0:0:0:0:0:0

Look familiar? In IPv4, it is:

0.0.0.0

This is sometimes called the gateway address. Its purpose is the same in IPv6 as it is in IPv4. It indicates the absence of a valid address and can be used as a source address by a device, at boot time, for example, to get things going (acquire an IP address) or to send I don’t know who to send to packets. Just like 0.0.0.0 should never be assigned to an interface, the 0:0:0:0:0:0:0:0 should never be assigned to an interface.

There is a loopback (127.0.0.1) equivalent as well. It is:

0:0:0:0:0:0:0:1

which is often abbreviated as

::1

You will note that no private addresses are called out in the IPv6 specification. That is on purpose. Remember, the role of non-routable addresses (private) was to expand the ability for companies to stand up large networks when they could not reasonably expect to get access to a large number of routable (real) IPv4 addresses. Most companies are lucky to get one or two routable addresses for their entire business. Sometimes that includes hundreds if not thousands of devices running in the private address space and relying on Network Address Translation to provide them access to the Internet. Because there are so many viable addresses in the IPv6 specification, it was determined that there was no practical need for private addresses (see below).

Link Local and Site Local Scope

IPv6 defined a link-local address scope and a local scope address space. Specifically:

fe80::/10

Like the IPv4 link-local address, it is for use on a single link and should not be routed. Its primary purpose is to enable autoconfiguration mechanisms for Nearest Neighbor Discovery and networks without routers8 to create temporary networks.

The idea of a site-local address was deprecated in RFC3879 due to potential problems. It has been replaced by the Unique Local IPv6 Address, also called the ULA and specified in RFC 4193. This address space allows for globally unique but non-routable addresses. The primary purpose is to facilitate communications within a corporate site or a confined set of networks9. The ULA range is:

fd00::/8

You may find some deprecated address space in the fec0::/10 range. This should be converted if still in use to the newer standard.

Now that we have covered the basics of both of the addresses versions available, let’s look at how the data moves through the various systems and servers and how we allocate IP addresses to devices and find things on the network and the Internet in general.

Ports

IP addresses are responsible for host-to-host communications and packet transfers. Once the data hits the server, it has to be delivered to the correct service. When we combine the IP address and port number, it is properly referred to as a socket. Occasionally you will find port and socket used interchangeably. You may also hear sockets referred to as the interprocess communications methodology for data that does not leave the server. Think about a web server, talking to a backend database that is on the same server.

On most servers, there is usually a set of pre-defined ports, associated with pre-defined services. You can find a list of these in the /etc/services file. Note that any port below 1023 is considered registered and reserved, which means that an application has to be started with root (superuser) privileges (or set UID). Most of these should come as no surprise:

ftp-data 20/tcp

ftp 21/tcp

fsp 21/udp fspd

ssh 22/tcp # SSH Remote Login Protocol

telnet 23/tcp

smtp 25/tcp mail

http 80/tcp www # WorldWideWeb HTTP

Ports from 1024 through 49151 are dynamic ports and may be reserved and need root (superuser) privileges to launch.

In most cases, you do not have to define a port to use, when you are utilizing a well known service on a well known port. For example, we are all familiar with the basic URL format of:

https://www.google.com/

The URL (Uniform Resource Identifier), comprises the protocol (https), the host name (www) and the domain (google.com). Standard stuff. But what if you are running a web server on a port other than port 80? There are a number of reasons to do this, but you have to fiddle the URL. Specifically:

https://www.google.com:8080/

So we see the protocol (https), the host name (www), the domain (google.com) and the port number (8080) after a colon. Similarly, if you were testing without a registered host name, you might use something like this:

https://192.168.1.184:8080/

While most applications use the well known ports to prevent confusion, some sites change these ports as a method of deterring hacking attempts. Once upon a time, that might have been a good idea, even practical, but with the number of levels of security infrastructure wrapped around most networks today, changing the default ports is generally not considered to be a wise decision, but if you elect to do so, usually the configuration file of the application is where you do that.

For the representation of an IPv6 address followed by a port number, the best way is to put the IPv6 address in square brackets, followed by a colon and the port number, like this: [2001:db8::1]:80.

DHCP

Dynamic Host Configuration Protocol (DHCP) was developed to reduce the burden on administrators and operations staff when it came to assigning IP addresses to host. When there were only a few hosts on a network, manually encoding an IP address and the associated information the host might need to communicate with the other hosts on the network. As we added more and more hosts to the network, the exercise of managing and keying in those addresses began to become a burden. When we add mobile devices, laptops, and printers, we have a full-time job for someone. A tedious job, but full-time, at the very least.

Administrators are all about doing as little as possible. They automate as much as they can so they have time to do the actual work. Enter DHCP.

We will not go through the entire ping-pong process of how DHCP works, but we will cover some highlights.

Address Assignment and Data

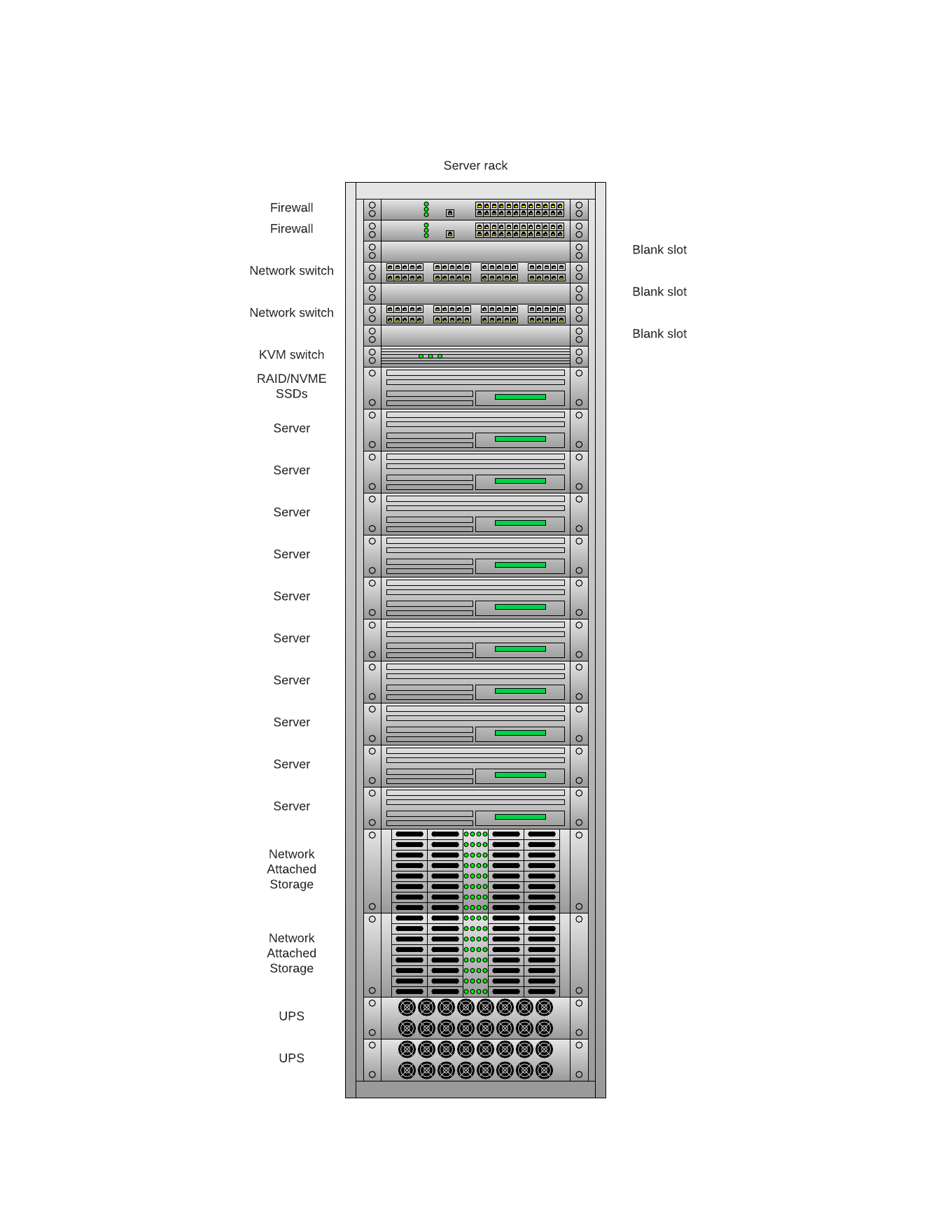

The primary mission of DHCP is to assign an IP address to each host or interface that asks politely for an address. Each network segment should have its own DHCP server. However, a modification to the router allows segments to share DHCP servers (within reason). Ensure there is only one device on the network segment handing out IP addresses. Otherwise, you run the risk of an IP collision, which can be challenging to resolve.

The second mission of the DHCP server is to provide the secondary information most devices need, specifically the netmask, gateway, and DNS server(s). It can also provide the hostname, domain suffix information, NIS domains, etc.

Static vs Dynamic Hosts

Finally, we use DHCP for both static and dynamic IP address assignments. For those machines and hosts that need to have the same IP address all the time, you can configure and key/value pair with the destination’s MAC address and matching IP address. Usually, this would be for critical pieces of infrastructure, such as printers, key servers (DNS, storage, web), and other monitoring devices. Other systems can use the dynamic process to get whatever address is next on the list.

Stateless Address Autoconfiguration (SLAAC)

SLAAC is not technically DHCP. In fact, for IPv6, DHCP has been extended and new features added, but it is essential to discuss SLAAC, and this is the logical place.

Because of the almost impossibly large address space and the number of interfaces that can be allocated addresses, the idea of manually assigning addresses or even building up a DHCP server for all but the essential systems in your network is a Herculean effort. When you have to now assign addresses for every wandering device that might appear on your network, and you want things to be as automatic as possible. The concept of automatically assigning an IP address and assorted information needed to get up and running was not conceptually part of the IPv4 standard, which led to the development of the DHCP server to provide that essential information. IPv6 made sure to incorporate that out of the box and is especially useful for devices with limited processing capabilities such as televisions, refrigerators, toasters, etc. It also eliminates the need for a stand-alone DHCP server in those areas where it would not be convenient, such as the average home.

SLAAC is considered Stateless Autoconfiguration.

Routers that support the network will need some additional configuration. Still, once they are online, the host uses a combination of local information and information from the router to develop its own IP address. Again, if no routers are available, the address will be generated with a link-local prefix beginning with fe80.

You can also combine this with an active DHCPv6 server for greater control. You can also assign a permanent address via SLAAC.

DHCPv6

DHCPv6 is primarily for corporate environments where traceability is needed, unlike the more generic SLAAC. It is considered Stateful Autoconfiguration.

Like DHCP, DHCPv6 can do similar things - hand out the basic configuration information, setup hostnames, etc. One of the requirements for DHCPv6 is that it must support Dynamic DNS. While you can continue to manage DNS manually, DHCPv6 and DNSv6 together mean never having to touch your DNS tables again. And when we are assigning hostnames to hundreds of interfaces at a time, anything we can automate is a good thing. We will cover DNS in the next section.

DNS

Most humans are great with names and not so great with numbers. Computers are the other way around.

In the early days of the Internet, where there were only a handful of hosts out there, someone, or a group of someones, would update their /etc/hosts file and upload it to a common share - usually a newsgroup - for other administrators to install or edit as appropriate. The file is a legacy of early systems and still exists today, even on MS Windows systems, but it is rarely if ever used. A typical modern hosts file looks like this:

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

127.0.1.1 rootPi

This is from one of my Raspberry Pis, which run a Debian Linux distribution. As you can see, the only information in the file is for the localhost and link-local IP addresses.

The nice thing about host files is their simplicity. If I did not want to run something more complicated (like DNS), I could add in the IP address of all the hosts on my network and their associated hostnames, check the file into version control10 and then distribute the host file to my half a dozen machines that might need it11.

This worked when there were a couple of servers. It began to quickly break down when there were a couple of servers per location and it utterly collapsed as the number of servers on a segment outnumbered the amount of time administrators had to configure them. Enter the Domain Name Service (DNS).

DNS had two goals. The first, provide automatic sharing of hostname/IP key/value pairs with all servers that needed to know them. The second was to provide the exact (authoritative) hostname/IP key/value pair for a service when you did not own or control the service. This second part is what launched DNS into its own.

Remember that in the early days of the Internet (the mid-80s), the number of multi-tenant hosts numbered in the hundreds. Most were experimental systems, connected by dial-up connections to one or two other remote systems (as far as a local phone call would reach). Most organizations could only afford a couple of these devices, and they were not all interconnected. Most administrators learned the job on the job, and through tools like the burgeoning Usenet, they pretty much all knew each other and shared tips and tricks daily.

As the networks and interconnected devices expanded and the cost of connecting to remote systems fell, the ability to know your neighbour fell, and a method of distinguishing what service was where became important. Especially as new servers and services were pushed into service weekly12.

As I have eluded, DNS is nothing more than a key/value pair listing of all the hosts in your environment and just your hosts. The rest of the information revolves around how you share the information, who you share it with, and how long it is valid. Modern implementations of BIND (the primary software that runs DNS for most *Nix hosts) support dynamic DNS, including IPv6 addressing.

Dynamic DNS is nothing more than a programmatic ability to push changes in and out of the DNS key/value pair database without human intervention. This, of course, includes a lot of security wrapped around the process.

For your education, a typical DNS entry file looks like this13:

;

; Host addresses

;

localhost.movie.edu. IN A 127.0.0.1

shrek.movie.edu. IN A 192.249.249.2

toystory.movie.edu. IN A 192.249.249.3

monsters-inc.movie.edu. IN A 192.249.249.4

misery.movie.edu. IN A 192.253.253.2

shining.movie.edu. IN A 192.253.253.3

carrie.movie.edu. IN A 192.253.253.4

;

; Multi-homed hosts

;

wormhole.movie.edu. IN A 192.249.249.1

wormhole.movie.edu. IN A 192.253.253.1

;

; Aliases

;

toys.movie.edu. IN CNAME

toystory.movie.edu.

mi.movie.edu. IN CNAME monsters-inc.movie.edu.

wh.movie.edu. IN CNAME wormhole.movie.edu.

wh249.movie.edu. IN A 192.249.249.1

wh253.movie.edu. IN A 192.253.253.1

The essential things to see here are the IN A entries. This indicates the address is an Internet address. Yes, DNS does provide for other types of addresses, but you will seldom see something other than IN X where X is either an A (or AAAA for IPv6), CNAME, which represents an alias or cloak name. This allows a server to have various names, depending on the purpose or migration between one name and another. Two other entries are NS, which explicitly defines the Name Servers on your network, and PTR, which is a pointer record that maps an IP address to hostnames. DNS technically has at least two look-up files, one that maps hostnames to IP addresses (Forward) and one that maps IP addresses to hostnames (Reverse). The complexity of your network will determine the complexity of your Domain Name Service setup. If that becomes your mission, you need to read the Cricket Books14.

The last piece I wanted to cover is how does a customer (client) find a service that is not in their local network. Remember, I said that one of the DNS powers is to find the authoritative address of that service? For example, how does one find out what IP address www.google.com is if you are not actually on the Google network? Why DNS, of course.

First, what IP address exactly is wwww.google.com?

Over time, we developed several tools to mimic DNS calls to ensure that our local DNS worked correctly and our network connections worked correctly. Today, this is considerably less of an issue than in early 2000, but knowing some of these tools can help.

If we execute a dig command against www.google.com we get back something like this:

$ dig www.google.com

; <<>> DiG 9.10.3-P4-Raspbian <<>> www.google.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 22088

;; flags: qr rd ra; QUERY: 1, ANSWER: 6, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.google.com. IN A

;; ANSWER SECTION:

www.google.com. 277 IN A 172.253.122.104

www.google.com. 277 IN A 172.253.122.106

www.google.com. 277 IN A 172.253.122.147

www.google.com. 277 IN A 172.253.122.103

www.google.com. 277 IN A 172.253.122.105

www.google.com. 277 IN A 172.253.122.99

;; Query time: 14 msec

;; SERVER: 192.168.1.254#53(192.168.1.254)

;; WHEN: Tue Jan 04 09:47:35 EST 2022

;; MSG SIZE rcvd: 139

Wow. Six servers answer to the name of www.google.com.

Given the size of Accenture how many do we have?

$ dig accenture.com

; <<>> DiG 9.10.3-P4-Raspbian <<>> accenture.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 20512

;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;accenture.com. IN A

;; ANSWER SECTION:

accenture.com. 1449 IN A 170.248.56.19

;; Query time: 15 msec

;; SERVER: 192.168.1.254#53(192.168.1.254)

;; WHEN: Tue Jan 04 09:51:09 EST 2022

;; MSG SIZE rcvd: 58

One? We only have one?

Well, not quite. What DNS tells us, is that there is one IP address assigned to answer to accenture.com (Yes, I played a trick on you). If we search for www.accenture.com however, we get:

$ dig www.accenture.com

; <<>> DiG 9.10.3-P4-Raspbian <<>> www.accenture.com

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 3570

;; flags: qr rd ra; QUERY: 1, ANSWER: 6, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags:; udp: 4096

;; QUESTION SECTION:

;www.accenture.com. IN A

;; ANSWER SECTION:

www.accenture.com. 253 IN CNAME 4229prod2cf.r53cioaccenturecloud.net.

4229prod2cf.r53cioaccenturecloud.net. 3 IN CNAME d13iavm24b37u0.cloudfront.net.

d13iavm24b37u0.cloudfront.net. 39 IN A 99.84.125.65

d13iavm24b37u0.cloudfront.net. 39 IN A 99.84.125.97

d13iavm24b37u0.cloudfront.net. 39 IN A 99.84.125.111

d13iavm24b37u0.cloudfront.net. 39 IN A 99.84.125.50

;; Query time: 66 msec

;; SERVER: 192.168.1.254#53(192.168.1.254)

;; WHEN: Tue Jan 04 09:53:33 EST 2022

;; MSG SIZE rcvd: 200

DNS is very fussy. It expects you to look up a hostname.domainname pair, and remember that the domain name includes the type of domain (.com .org .us). Once upon a time, if you searched for a web server at accenture.com, you would get no results because there is no host named accenture in the .com domain.

Does it matter? Yes. We have gotten lazy over the last decade or so about defining the host we want to attach to when talking about the commercial Web (as compared to the Internet). Other services, like mail, file sharing (sftp), chat (xmpp), etc., could be valid hosts. Internally some servers have databases, file-sharing, or just general business processing logic that need to be addressed and have valid DNS entries of host.domain. Therefore, when you design your DNS, you have to consider this.

In the above example, the address www.accenture.com is actually being served up from many aliased caches hosted at Cloudfront.net, rather than hosted by accenture.com, which could be an alias pointing to something else internally.

But if we go back to the question, how do we get from here to there, because we clearly can, we have to understand how the Internet as we know it today is built.

In the beginning, there were three high-level domains (TLDs): .com .org .net Today, there are hundreds, but let’s focus on these for the moment. It quickly became apparent that someone had to be responsible for centralizing the addresses. Think of the white pages for your phone. And that’s what happened. A couple of root servers were stood up, and all DNS zones were transferred into those servers. That did not last long. Eventually, they created a root server for each of the TLDs. Today, there are 13 root servers scattered around the world. But they do not contain all the DNS zone records. They contain information on how the next server can find the correct answer.

DNS is all about the nearest neighbor. In its algorithm is a value that sets the time to live ($TTL) that says how long a zone file can be retained in a neighbor’s cache. So, when I look up www.google.com, the first place DNS looks is in my local DNS cache. If it cannot find the correct answer there, it looks to my name server’s cache (which for me is actually one of the Google name servers). Bingo, search done. But what about accenture.com? If it is not in my cache or my nearest name server cache, it is passed up to the root server that knows about .com and then down to Accenture’s name service. And you can see that in the examples above.

For the query to www.google.com it took 14 msec to find the entry. I probably would not have seen the request if I had watched my wire because it likely was pulled from my machine’s onboard cache. But for www.accenture.com there is enough elapsed time (66 msec) that my client had to resolve the address, possibly all the way to Accenture’s name service and over to Cloudfront because it was not cached locally.

Lastly, it is essential to remember that when DNS fails, and it does fail, more often than we would like to admit, it can cause catastrophic headaches. More hours have been lost troubleshooting DNS issues than spent trying to figure out IP address conflicts. Most of the major outages in 2021, not attributed to an AWS failure, resulted from DNS issues15.

Service Location Protocol (SLP)

Finally, we will glance over the Service Locator Protocol, a new entry into the how do I find service. Unlike DNS, which focuses more on available, host-level services, SLP tends to focus more locally on those things that are important internally.

Devices use SLP to announce services on a local network. Each service uses a URL to locate the service. Additionally, it may have an unlimited number of name/value pairs, called attributes. Each device must always be in one or more scopes. Scopes are simple strings used to group services comparable to the network neighborhood in other systems. A device cannot see services that are in different scopes.

What is different about SLP is that it is an announcement engine rather than a lookup engine.

Suppose you needed to find an LDAP server but did not know where to look for it. You could dig against ldap.localhost.com and find your local LDAP server, but if you are an application talking to another application, you don’t have time for that. Instead, you rely on SLP to tell you where your LDAP authentication server is. What is critical about SLP is it is designed to support microservice and the ephemeral environments of cloud computing. While Dynamic DNS allows for a number of these features, it still is a pull service. SLP is a push service.

The first significant use of this was for printer discovery16. For example:

service:printer:lpr://myprinter/myqueue

You are a traveling user. You sit down at your field office and need to print a document. This is not your office. How do you find your printer? With SLP enabled, it appears in your list of available printers. You select it and print to it.

Much of the configuration of SLP-enabled devices is done at set up. It can be proprietary or require configurations baked into the application deployed to the container or server, so there is no easy example other than to show the URI as above.

SLP is not new, but it is being rediscovered as more and more services are migrated to more dynamic environments where more automation is required to link systems and services. For more information, you can look up topics like Zero Configuration Networking.

Conclusion

None of the information covered will make you an expert at any of these topics.

However, by understanding some of the plumbing involved in environments, you will hopefully understand where issues can crop up and areas where you could look for things when systems stop working. Further, despite the considerable increase in bandwidth speed over the years, system latency, something we all fight, is still an issue. It takes time for the initial configuration of a host or service to get the correct IP address so it can communicate or the proper name server. It takes time to look stuff up, and route that information to the right service, port, or socket, and it takes time to consume the message, tear it apart and use the appropriate pieces.

It is also essential to understand that sometimes, the problem is beyond our ability to resolve. Much of today’s Internet infrastructure is cloaked in layers of abstraction to make it easier for most people to use. But when you step away from some low-level systems, that abstraction can sometimes lead to programmatic changes that have unusual outcomes. Bugs in code are nothing new, but when a change to a configuration triggers a bug that might not otherwise have been activated, and no human understands what happened, finding a quick fix becomes much more challenging. And sadly, those who understand the plumbing are further and further removed from the infrastructures they once built, sometimes painstakingly by hand17.

It is essential to understand the data flow within your application and between applications to reduce the amount of overhead and ensure the effective delivery of data. Understanding IP addresses and associated services is key to that transfer.

Web Links

- IPv6 Federal Policy

- Service Location Protocol

- IPv6 Reserved Addresses

- IPv6 Multicast Address Assignments

- Current list of Root Servers

Footnotes

- O'Reilly Media has long used animals on its covers to personalize the dry content inside. Thus the Crab Book, the Cricket book, etc. For IPv6, they use no less than three different animals - the Snail, the Turtle, and the Sand Piper. IPv6 is a vast topic area. ↩

- OK, no, it does not explain why my oven or washing machine needs an IP address, but I digress. ↩

- There are also some excellent features around interface addressing that allows unicast and multicast addresses to be set per interface that is not technically possible in IPv4. Still, with virtual machines and DHCP and DNS games, we can achieve the same features. ↩

- RFC 5952 recommends using the following rules:

Leading zeros must be suppressed.

A single 16-bit 0000 field must be represented as 0 and should not be replaced by a double colon.

Shorten as much as possible. Use a double colon whenever possible.

Always shorten the largest number of zeros.

If two blocks of zero are equally long, shorten the first one.

Use lowercase for a, b, c, d, e, f. ↩

- No, I don’t know why you would want to either, but it is for compatibility. ↩

- Reserved https://www.iana.org/assignments/iana-ipv6-special-registry/iana-ipv6-special-registry.xml ↩

- Teredo: Tunneling IPv6 over UDP through Network Address Translations (NATs) https://datatracker.ietf.org/doc/html/rfc4380 ↩

- IPv6 relies heavily on, and in fact, expects there is a router in your network. ↩

- I honestly believe they created this for firewall manufacturers to continue selling a product and for encasing corporate networks. The original standard, designed before firewalls and bad actors, envisaged encrypted machine-to-machine traffic. Every machine had a valid, unique identifier, but when security people discovered that, they said it would not work for them. ↩

- You always put configuration files you change into version control, even if it is just a local git repository. ↩

- I manage my Pi and Beagleboard network with Python Fabrik, making it easy to push out minor changes like SSH keys, configuration files, and updates with a couple of keystrokes to all my devices. ↩

- In the space of a week in late 1999, I pushed a DNS server (the third for my company) into service, six web servers for timesheet entry, a reporting server, two new database servers, and an upgraded mail server. Over two years, our IP address structure changed three times. If it were not for DNS, you would not have been able to find anything. And yes, I was a bit frazzled by the time we were complete. ↩

- Stolen from the Cricket book. I don’t have access to a functioning DNS server at the moment. ↩

- DNS & BIND, DNS & BIND on IPv6 ↩

- The Cloudflare outage triage seems to be an issue of DNS misconfiguration and some BGP routing issues, which only made things harder to fix. ↩

- Most Novell networks utilized SLP under the covers as part of how it handled file shares and server location information, but most people did not know it. ↩

- I will be the first to celebrate the ability to assign IP and DNS names automagically and without human intervention, but I also maintain that every DevOps engineer worth their salt should at least understand how IP addresses work, route aggregation, and basic DNS, if only to be able to rule them out as an area of failure quickly. ↩