I once explained to a program manager the principles of software development with a plate of spaghetti. I am not sure he was convinced, but it got me thinking that a more expansive explanation might clarify things. And one of the nice things about this process is we will have a tasty dinner when we are done.

Let us dive in.

First, we must define some terms (and try not to stretch the analogy too much).

Terms

Ingredients: When we talk about ingredients, we mean the raw materials that go into our recipe [2]. In software, there are several types of ingredients. First is the actual source code. Think of this as the raw materials. Second, we have third-party libraries. Some shops split these into two groups: the unaltered and the altered libraries [3]. Finally, there are the pre-built components delivered by other teams. In the case of our recipe for fettuccine, all our ingredients are pre-built and produced [4] in different places, so we have to trust their quality control.

Directions: The directions are the instructions for putting the ingredients together to get what we want. In software, these are our Makefiles [5]. Having flour, eggs, and oil does not guarantee that we will get pasta [6] unless we follow the directions. The directions also include additional information for plating and delivery, much like software packaging and delivery. Sometimes this information is included in the base repositories. Sometimes it is carried separately.
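To make the Makefile comparison a little more concrete, here is a minimal sketch in Python of what a build tool does with directions: it works out an order in which every step comes after the things it depends on. The step names are illustrative assumptions drawn from the recipe, not a real build file.

```python
from graphlib import TopologicalSorter

# Each target maps to the things it depends on, much like a Makefile rule.
# The names are illustrative assumptions, not taken from a real build file.
STEPS = {
    "dough": {"flour", "eggs", "oil", "salt"},
    "fettuccine": {"dough"},
    "sauce": {"cream", "butter", "parmesan"},
    "plated_dish": {"fettuccine", "sauce"},
}


def build_order(steps):
    """Return one valid order in which every dependency precedes its target."""
    return list(TopologicalSorter(steps).static_order())


if __name__ == "__main__":
    for step in build_order(STEPS):
        print(step)
```

The exact order among unrelated steps can vary, but the dough always comes before the fettuccine, and nothing is plated until both the pasta and the sauce are done.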

Quantities: You will note that our ingredient list includes specific quantities of this or that. If you need to make more, you increase the amounts. If you want less, you decrease them. In the software world, this is analogous to the compiler flags and switches that control outcomes such as memory usage, pointers to gateways, and other preconfigured endpoints.
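As a rough sketch of the idea, the snippet below treats the number of servings as the "flag" and leaves the recipe itself untouched. The quantities are the metric sauce amounts from the ingredient list; the base yield of four servings is my assumption, since the recipe does not state one.

```python
from fractions import Fraction

# Assumption: the base recipe serves four. The recipe itself does not say.
BASE_SERVINGS = 4

SAUCE = {
    "heavy cream (ml)": 375,
    "unsalted butter (g)": 75,
    "grated Parmesan (g)": 125,
}


def scale(recipe, servings, base_servings=BASE_SERVINGS):
    """Return a new recipe with every quantity scaled to the requested servings."""
    factor = Fraction(servings, base_servings)
    return {name: float(amount * factor) for name, amount in recipe.items()}


if __name__ == "__main__":
    for name, amount in scale(SAUCE, servings=8).items():
        print(f"{name}: {amount:g}")
```

The recipe dictionary never changes. Only the setting passed in at build time does, which is the role a flag or switch plays in a software build.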

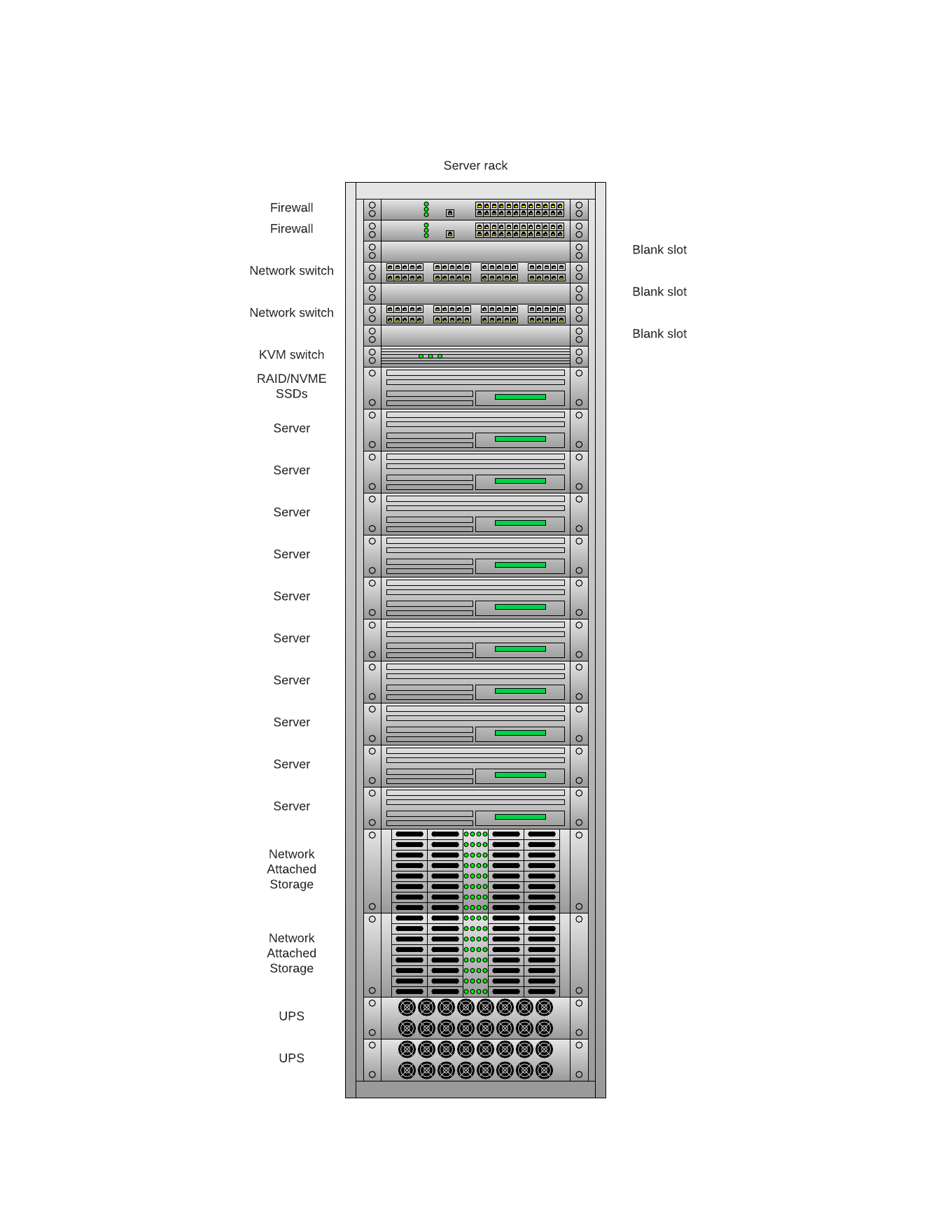

Tools and Equipment: Any good chef has their preferred tools. These are their knives, pots, pans, stoves, and fuel sources. The same is true for software. Build servers, test fixtures, and artifact storage are only some of the tools that will come in handy when you build (cook) your software [7].

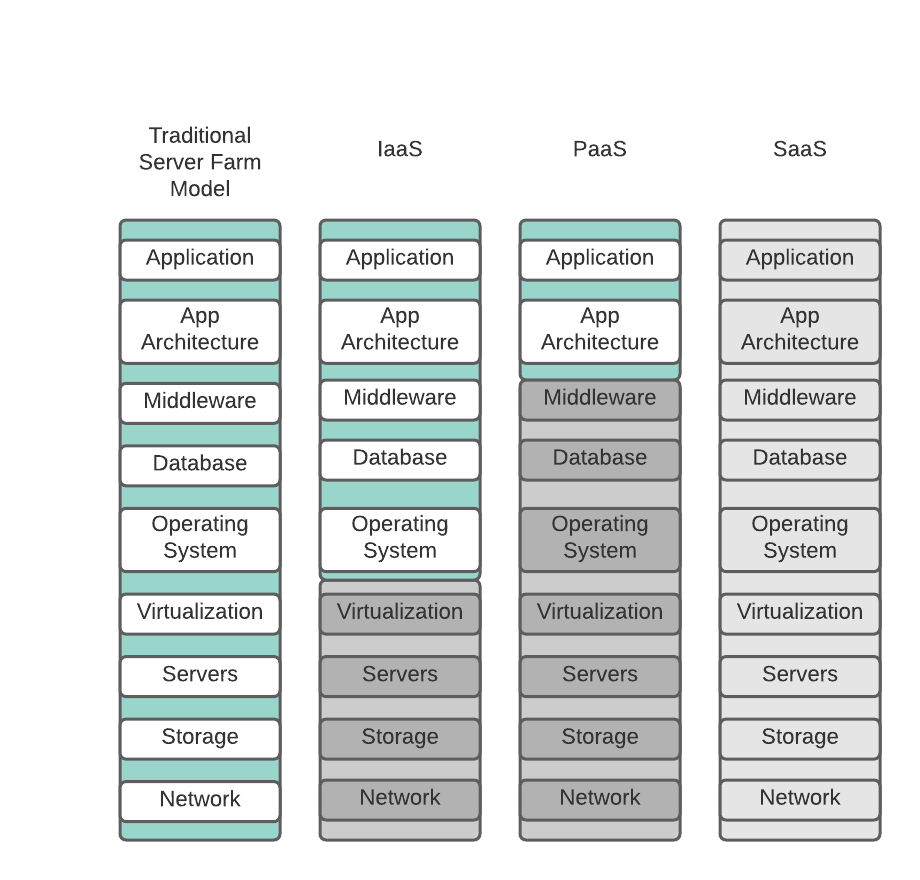

Environment: At the end of the day, you need to plate (deliver) your meal. Are you cooking for the family? Cooking to test the recipe? Cooking for a charity meal? These are different environments, even if the end product is the same. The same is true for software. For example, cooking regularly to see if the recipe works would be development, validating that it tastes good would be quality assurance, and serving eight for a charity meal would be production.

The Ingredients

After we initialize our repository with git init (get out some dishes), we check in our ingredients with a git commit (measure them out). If we are going to make Fettuccine Alfredo, we need the following ingredients:

Pasta (if we are going to make our own) [8]

- 3-3/4 cups (590g) pasta flour [9]

- 4 eggs

- Extra-virgin olive oil

- Salt

Sauce

- 1-1/2 cups (375ml) heavy cream

- 5 tablespoons (75g) unsalted butter

- 1 cup (125g) grated Parmesan cheese [10]

- 1 lb fettuccine

- Salt

- Pepper

- Freshly grated nutmeg

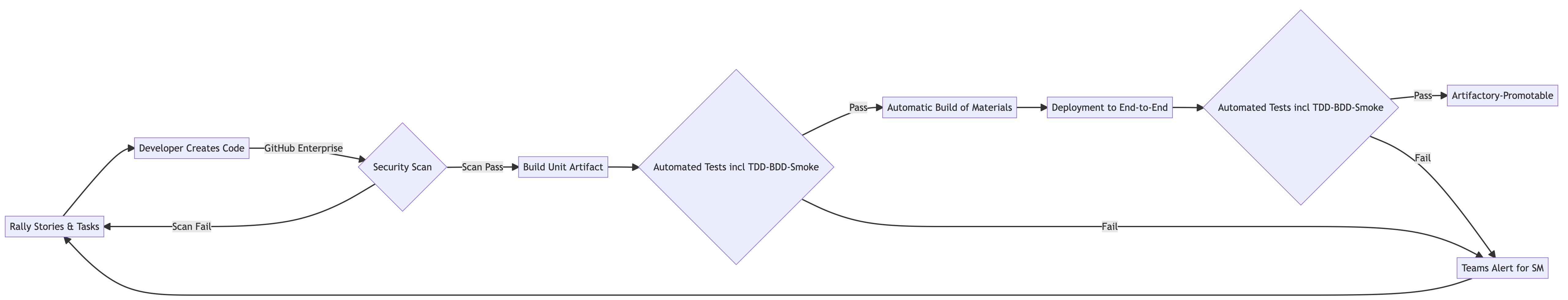

Once we have committed our code and the automated tests (TDD/BDD) [11] to the repository, along with the other build instructions, we are ready to start cooking [12].

The Directions

Now we turn to the build server and push our code into it, and if our instructions are good, we get something useful out the other side. In this case, we will take our pasta ingredients and build some fettuccine noodles.

Pasta

To do this, we take our ingredients and follow the directions. You will want to wash your hands before we begin. Think of it like linting your software. You want to be hygienic.

Place the flour in a mound on a wooden or plastic surface (marble will chill the dough and reduce elasticity). Make a well in the flour and break the eggs into it. Add a drizzle of olive oil and a pinch of salt to the well.

Start beating the eggs with a fork, pushing up the flour around the edges with your other hand to make sure no egg runs out, and pulling the flour from the sides of the mound into the eggs.

When you have pulled in enough flour to form a ball too stiff to beat with your fork, start kneading the dough with the palm of your hand, incorporating as much of the flour as you can. You will have a big ball of dough and a bunch of crumbles. Push them aside and scrape the surface clean with a metal spatula.

Sprinkle the surface with more flour, place the dough on it, and knead by pushing it down and away from you, stretching it out. Fold the dough in half and continue pushing it down and away. Keep repeating this action until the dough no longer feels sticky and has a smooth surface. It should take about 15 minutes.

Cut the dough into four pieces. Wrap in plastic the pieces you are not going to work on immediately. Makes about 1 lb.

But this is only the first part of the build, and there are some tests built into the process of making the dough: validation checks like "ball too stiff to beat with a fork," "crumbles on the side," and "smooth surface." These checks help you evaluate the quality of the dough, just as unit tests help us assess the quality of the code early. By running unit tests, you can quickly ascertain the quality of the code and fix any issues before they become too hard to resolve. We call this shift left.
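If we wrote the dough checks down as code, they might look something like the sketch below. The `Dough` class and its fields are hypothetical names I made up for illustration. The checks themselves come from the kneading directions.

```python
from dataclasses import dataclass


@dataclass
class Dough:
    # Hypothetical observations a cook might record while kneading.
    too_stiff_for_fork: bool
    sticky: bool
    smooth_surface: bool


def check_dough(dough):
    """Return a list of failed checks; an empty list means the dough passes."""
    failures = []
    if not dough.too_stiff_for_fork:
        failures.append("keep pulling in flour: ball is not yet stiff")
    if dough.sticky:
        failures.append("keep kneading: dough is still sticky")
    if not dough.smooth_surface:
        failures.append("keep kneading: surface is not smooth")
    return failures


if __name__ == "__main__":
    under_kneaded = Dough(too_stiff_for_fork=True, sticky=True, smooth_surface=False)
    print(check_dough(under_kneaded))
```

Each failure tells you what to fix while the dough is still on the counter, which is far cheaper than finding out at the table.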

Our wrapped dough is now an artifact, and we can check it into our repository (put it on a shelf or in the refrigerator, depending on how long you expect to process the first piece).

But a blob of dough is not a finished product, so we need to process it a bit more.

Make the Fettuccine

Making fettuccine is a time-consuming process. You can do it by hand (manually) or use a machine (automated). Let’s roll it out.

Personally, I prefer to use a machine. My build server is a KitchenAid with a pasta roller attachment. You are going to need some additional items:

- Parchment paper

- Extra flour

- Lots of extra space to lay out the pasta to dry

Set your machine to its widest setting and run one piece of dough through it two or three times. Flour the dough lightly if it starts to stick. Fold the dough into thirds, reduce the width by one setting, and start again. You will continue reducing the width each time. You may need to cut your dough if it becomes unwieldy (it will). Place it between parchment when you are not actively working with it. I find that on my machine, for fettuccine noodles, I have to squish it down to setting 5. You may have different results.

Again, like software, as you manipulate your package, you will have to experiment. What is the expected outcome? How do you adjust the flags and configurations to achieve that desired outcome? Until you have done it once or a dozen times, it requires experimentation (development).

You can cut your pasta by hand or use a machine. Again, I find the machine to be more helpful. Once you have the dough at the desired thickness, cut it into strips, set them on the parchment, and set them aside to dry. You can also trim them to the same size or square off the bottoms, or, if your timing is right, you can toss them in a pot of boiling water and cook them.

I usually make my pasta the day before, so I let it dry before cooking. Again, we have an artifact for our repository. In this case, a finished set of pasta noodles (binary) is ready for the next step in the process.

The Alfredo Sauce

Fettuccine Alfredo is a two-step process: the pasta (repo pasta) and the sauce (repo sauce). If you are using packaged pasta, think of it as coming from an archive (archive pasta). You can use packaged sauce too, but we will make it from scratch. I think it tastes better.

First, we must cook the pasta (build cooked pasta). Bring a large pot of water to a boil.

We must also get the sauce going (build sauce). Bring the cream and butter to a boil in a large saucepan over high heat. Reduce the heat to low and simmer for about one minute. Add six tablespoons of the grated Parmesan and whisk over low heat until smooth, about one minute longer. Remove from the heat and season to taste with salt, pepper, and a generous pinch of nutmeg. (Be judicious with the salt. Fresh Parmesan is salty enough by itself.)

Generously salt the boiling water, add the pasta, and cook until al dente, 1-3 minutes. Drain the pasta well.

Put the pasta in a warm, large, shallow bowl. Pour on the sauce and sprinkle with more cheese. Toss well and serve immediately.

Again, we have several checks along the way (test steps) that we can validate.

Delivery (Plating)

The instruction "serve immediately" does not tell us anything about how we are going to serve our meal.

If this were a development environment, we might just scoop a forkful out of the bowl and see how it came out. For a quality check, we might serve it to our family by scooping it out onto the day-to-day plates, putting it on the table, and pouring a lovely Chardonnay to go with it. But for production, we might get out the good china, serve each guest their own plate, and ask them if they want more cheese on top.

It is important to note that regardless of how we plate (deliver) our meal, there are no changes in the basic ingredients or build process. Whether we deliver small batches to validate, larger batches for user acceptance, or a full meal for eight, the recipe is the same.

And that is what is essential about software delivery. Regardless of the environment we deliver to, we must use the same ingredients, tests, build and deploy processes each time.
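Here is a small sketch of that principle. The artifact is built once from the ingredients, and each environment changes only how it is plated. The environment names follow the ones used above; the `build` and `deliver` functions, the hash standing in for a real artifact, and the serving counts for development and QA are all assumptions for illustration.

```python
import hashlib


def build(ingredients):
    """Produce a deterministic 'artifact' fingerprint from the ingredient list."""
    recipe = "\n".join(sorted(ingredients)).encode("utf-8")
    return hashlib.sha256(recipe).hexdigest()


# Only the plating differs per environment. Serving counts other than the
# eight for production are assumptions.
ENVIRONMENTS = {
    "development": {"plate": "a fork from the bowl", "servings": 1},
    "qa": {"plate": "day-to-day plates", "servings": 4},
    "production": {"plate": "the good china", "servings": 8},
}


def deliver(artifact, environment):
    """Pair the unchanged artifact with the environment's delivery settings."""
    return {"artifact": artifact, **ENVIRONMENTS[environment]}


if __name__ == "__main__":
    artifact = build(["fettuccine", "heavy cream", "butter", "Parmesan"])
    for env in ENVIRONMENTS:
        print(env, deliver(artifact, env))
```

Every environment receives the same fingerprint. If the fingerprint differs between development and production, something other than the plating has changed.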

This ensures that what we deliver is the same each time. Of course, when you cook, some variables affect the outcome. A pinch of salt might be larger or smaller each time. This is not the case in software development, where every measure is the same, and there are no variations between one build and another unless you change the underlying recipe.

Buon Appetito!

1. Recipe based on Fettuccine Alfredo and homemade pasta from Williams-Sonoma’s Pasta book.

2. Recipes are nothing new to software development. The orchestration tool Chef has long used recipes to describe its installation procedures, and other culinary terms to define its tools and processes.

3. Unaltered libraries are used exactly as downloaded. Altered libraries have had some change made to them after they were downloaded. In some cases this is a patch; in others, it is a change to the underlying source code.

4. Pre-built in this case means someone else is providing them to us. I am not a wheat farmer, so I am not growing farina in the back yard. Similarly, I am procuring my eggs and oil from somewhere, and I am certainly not in Italy, so my cheese is made by yet someone else from their list of ingredients.

5. To use the C definition.

6. In fact, if we add yeast and water, we will get bread.

7. Build servers include Jenkins and TeamCity, test fixtures include SonarQube, and JFrog is one of the many artifact storage engines out there.

8. If you are not going to make your own, use a good quality fettuccine noodle, the fresher the better.

9. Sometimes called 00 Farina or Semolina flour. You can use any good quality flour you have if you do not have pasta flour.

10. If you can grate it off the block, so much the better.

11. Test-driven development (TDD) is the unit tests and linting that should precede any code creation. Behavior-driven development (BDD) is the functional (acceptance) tests needed to close out the story and accept it.

12. We will discuss automated testing methodologies in another post.